It's been a little while, but things are finally reaching the point where I feel satisfied enough to talk about them. (Plus, I now have the time to do just that.)

Life started with a rack full of brand-new Sun hardware, all wired in, mostly powered off. Thanks to mrz and Justin for all the heavy lifting!

When I was handed the 'keys' (actually, I handed over my own SSH key), one of the Sun 4150s was pre-installed with CentOS. I was in!

I'll skip over an interesting learning experience with fancy Cisco switches and firewalls; maybe I'll talk about it some other time.

The first step was getting things to the point where they could boot and do something useful. It's always possible to use remote management tools to trick the BIOS into booting from some disk image over the network, but it's very non-standard and usually requires unscriptable Java-based tools. Yuck.

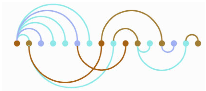

This is where Cobbler comes in and saves the day. In short, it's an amazing tool, out of Red Hat's labs, that makes it easy to build and manage a complete remote boot and installation solution. With it, you configure systems by MAC address, pick which distribution you want to install on each, and you are done. Once it is set up, you just turn on the server you need, and within minutes you have a freshly installed server, with all the specific installation instructions you wanted. The best part is that it's 100% reproducible. You need 10 machines all installed the same way? Easy: just cut-n-paste their MAC addresses, turn them all on, go get a coffee, and you've got 10 identical machines. You want to re-install a system for one reason or another? Same thing. Configure it for a reinstall in Cobbler and reboot the system.

It's very handy, and once you put all its configuration in source control, your entire server farm is easily reproducible.

Of course, having a bunch of servers all running a pristine Linux distribution isn't that useful. You need to get them configured in various ways to actually get them to do the work you need them to do. In my particular case, I first needed basic configuration (networking, access control, logging, etc.) and second, more importantly, I needed some virtual machines running to host some needed test services.

It's always possible to just log in to servers and start installing packages, editing configuration files, starting services, etc. But it's such a bad idea. Even if you are a documentation fanatic, you'll always miss a step or two, and rebuilding a system exactly the way it was becomes impossible.

That's what tools like Puppet are for. They are an automated way to describe what you want on a system and perform the necessary tasks to get the system there. So, instead of installing the ntpd package, hand-editing /etc/ntp.conf and starting the ntp service yourself, you let Puppet do it for you.

Of course, it's also all in source control, so every single change gets tracked. The tricky part about systems like Puppet is that they are declarative. So, in the example above, you describe things very differently. You say: /etc/ntp.conf needs to exist and needs this content. The package ntpd needs to be installed. The service ntpd needs the ntpd package and the /etc/ntp.conf file, and needs to be running.

Then Puppet figures out what to do, and in what order. It also knows that the running state of the ntpd service depends on the content of the /etc/ntp.conf file, so if that changes, the service needs to be restarted.
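To make the declarative idea a bit more concrete, here is a rough sketch in Python. It is not Puppet's syntax or its actual implementation, just an illustration of the principle: you declare what each resource needs, and a tool works out the order and notices when a service should be restarted. The resource names, `apply_order` and `needs_restart` are all made up for this example, and it assumes Python 3.9 or later for the standard `graphlib` module.

```python
from graphlib import TopologicalSorter

# Hypothetical resource names, modelled on the ntpd example above.
# Each resource maps to the set of resources it requires.
REQUIRES = {
    "package:ntpd": set(),
    "file:/etc/ntp.conf": set(),
    "service:ntpd": {"package:ntpd", "file:/etc/ntp.conf"},
}


def apply_order(requires):
    """Return the resources in an order where every dependency comes first."""
    return list(TopologicalSorter(requires).static_order())


def needs_restart(service, requires, changed):
    """A service needs a restart if anything it depends on has changed."""
    return bool(requires[service] & set(changed))


if __name__ == "__main__":
    # The package and the file come out before the service that needs them.
    print(apply_order(REQUIRES))
    # Editing the config file is enough to trigger a restart of the service.
    print(needs_restart("service:ntpd", REQUIRES, ["file:/etc/ntp.conf"]))
```

The point is that nothing in `REQUIRES` says "do this, then that". The ordering falls out of the declared dependencies, which is roughly the shift in thinking that a declarative tool asks of you.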

There are many such systems out there, like cfengine and bcfg2. I picked Puppet because it's very actively developed right now, and it supports Linux, Solaris and Mac OS X. The only downside for me is that it's all written in Ruby, and my Ruby-fu is pretty weak. That's not a very big deal, because for most normal stuff you deal with a configuration language, not the actual Ruby language.

At the end of last week, I had all the pieces in place, and I was finally able to directly help out Thunderbird development.

Somebody on #maildev needed access to some live IMAP servers that understood the CONDSTORE extension to test some stuff. With all these great tools already in place and working, it took very little time to get a new virtual machine configured, booted, installed and running some brand-new IMAP servers with support for CONDSTORE.

It's a small step, but it's really nice to finally get to directly contribute (even if in a small way) to the continuing success of Thunderbird.

There is still a lot of work to get done. Things are not as automated as I would like, and I've still got some important missing pieces dangling. For instance, there is no monitoring in place yet, so let's hope nothing goes down when I am not looking.

This coming week, the objective is to get a new mini-build-farm up and running, building Thunderbird continuously on Linux, Windows and OS X. While that's going on, I'll continue laying the missing bricks of the infrastructure itself.

That's enough for now.